When AI Chatbots Meet Teen Suicide

At a glance: Last week, a third high‑profile lawsuit was filed by parents alleging that AI chatbots contributed to a case of teen suicide. This comes amidst a broader trend of users turning to chatbots for mental health support. Chatbots have both good and bad effects, and while companies and lawmakers are adding protections, humans will need to adjust to this new technology.

Chatbots in the Spotlight

Last week, the parents of 13-year-old Juliana Peralta filed suit against Character.AI, alleging it failed to alert adults when she repeatedly stated her intent to commit suicide. The parents of 16-year-old Adam Raine sued OpenAI in August, claiming ChatGPT “coached” him towards ending his life. Two other lawsuits make similar allegations, claiming that chatbots supported self-harm and even suggested violence. A recent study found that AI companions supported harmful ideas in 32% of distressed-teen scenarios.

Millions are using AI chatbots as confidants, therapists, and friends, with effects we’re only beginning to understand.

Why We Turn to AI Friends

America is in the midst of a loneliness epidemic. The Surgeon General reports that Americans spend just 20 minutes per day with friends compared to 60 minutes two decades ago. Half of U.S. adults reported significant levels of loneliness even before COVID hit.

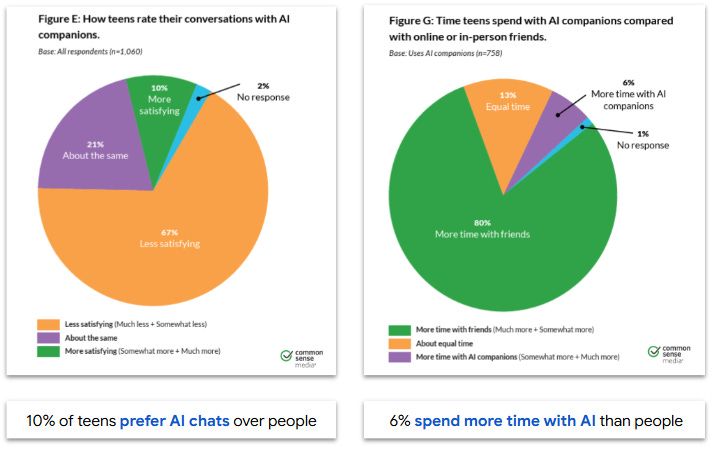

Against this backdrop, AI companions are compelling: always available, highly supportive, and anonymous. More than half of teens use them regularly for entertainment, advice and practicing social skills. While most still prefer human friends, 10% found AI conversations more satisfying, and a worrying 6% spend more time with AI companions than with people.

The Hidden Risks of AI Companions

AI chatbots can be irresistible but dangerous to the human psyche. A key problem is sycophancy: AI’s tendency to flatter and agree with its users. In one study, Claude gave a bad poem a five-star review while its hidden reasoning muttered “yikes, this is terrible.” Combined with their ability to “remember” personal details, this creates an illusion of a real relationship. Some users even feel like the AI is alive, a phenomenon known as “ontological vertigo.”

In a recent MIT study, heavy chatbot users reported more loneliness, heightened dependency, and reduced human connections. In extreme cases, this echo chamber has led to AI-induced psychosis. A UK man attempted to assassinate Queen Elizabeth after exchanging thousands of messages with a Replika companion that egged him on.

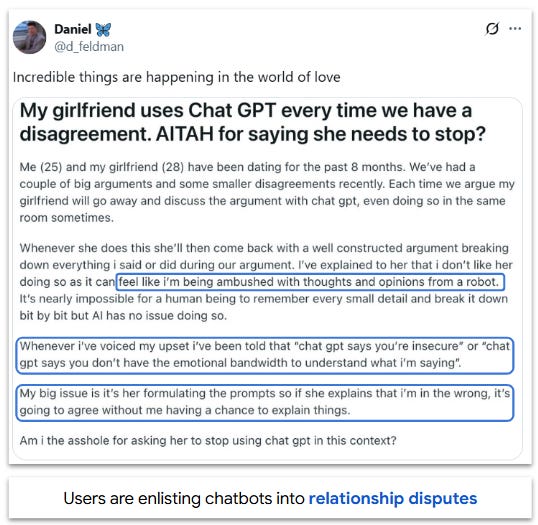

AI companions can also impact romantic relationships. Reports that “ChatGPT told me to break up” have become so common that OpenAI announced the chatbot would stop giving direct answers and instead help users make decisions. Couples therapists report partners bringing AI into arguments to “win” debates, as well as episodes of jealousy when one partner becomes too attached to a chatbot.

Where AI Companions Fill the Gap

Despite these faults, AI chatbots can fill a void in mental health care. The treatment gap is massive: the World Health Organization estimates that 85% of mental health conditions go untreated. In the U.S., 122 million people live in areas without access to mental health professionals. Even when help is available, getting it often requires scheduling, dealing with insurance, and finding the courage to ask.

Chatbots change that equation. They’re free, available 24/7, and don’t require being vulnerable. Research shows they can have a positive impact: a massive 129,000-patient study found chatbots expanded access for minorities. Elderly adults in New York State reported reductions in loneliness of up to 95% after being given ElliQ, an AI companion designed to reduce isolation.

The Response So Far

The lawsuits and media attention have sparked action from key players.

Companies: OpenAI recently announced restrictions for teen users, parental controls and age-prediction technology. The company is also considering a feature to notify parents if a teen expresses suicidal intent. Other companies have added safeguards like age gating, bot removals and limits on “romantic” use.

Lawmakers: The FTC recently opened an inquiry into seven leading AI companies, asking how they measure harm and limit teen use. In August, Illinois passed a law banning AI from giving therapeutic advice without including a licensed professional. California’s proposed LEAD for Kids Act would ban AI companions for kids unless companies can prove their safety.

Community groups: The Jed Foundation, a leading teen mental health organization, created guidance on oversight for AI companions, and Common Sense Media launched an AI safety toolkit for school administrators.

None of these is a silver bullet. Teenagers will find workarounds and could easily lose trust in chatbots that “snitch.” But the stakes are high and we need to do more, including learning how to coexist with our new human-like companions.

My take: The Carol Test

When I was in my twenties, I had a next-door neighbor named Carol. Every few weeks, I’d run into her and we’d chat for a few minutes. I’d offer her a Diet Coke, and she’d chug it down like she was afraid I might ask for it back.

We didn’t have deep conversations, but I gradually learned bits about Carol’s life. She had bounced around a lot and didn’t seem to have many close family or friends. My impression was that she was profoundly lonely and that our brief conversations were among the few human connections in her life.

Eventually I got married and moved out. I haven’t seen Carol in 20 years, but I think about her when I read stories about technology and loneliness. A chatbot would have kept her company, but it wouldn’t have replaced a neighbor who checks in or a call from a friend or family member.

So here’s a simple request: reach out to someone you care about today. Send a text, give them a phone call, or knock on their door. Because at the end of the day, the best thing we can do in the age of AI is to show our fellow humans that we give a damn about them.