The AI Slopalypse Spreads to the Office

GP-Too Much Information

At a Glance: Last week, Stanford released a report titled “AI-Generated ‘Workslop’ Is Destroying Productivity.” It investigated the growing trend of AI-generated content that masquerades as good work but lacks the substance to meaningfully advance a given task. This trend has many implications, and this edition focuses on how you can thrive amid the rise of AI in your company.

GP-TMI: Too Much Information

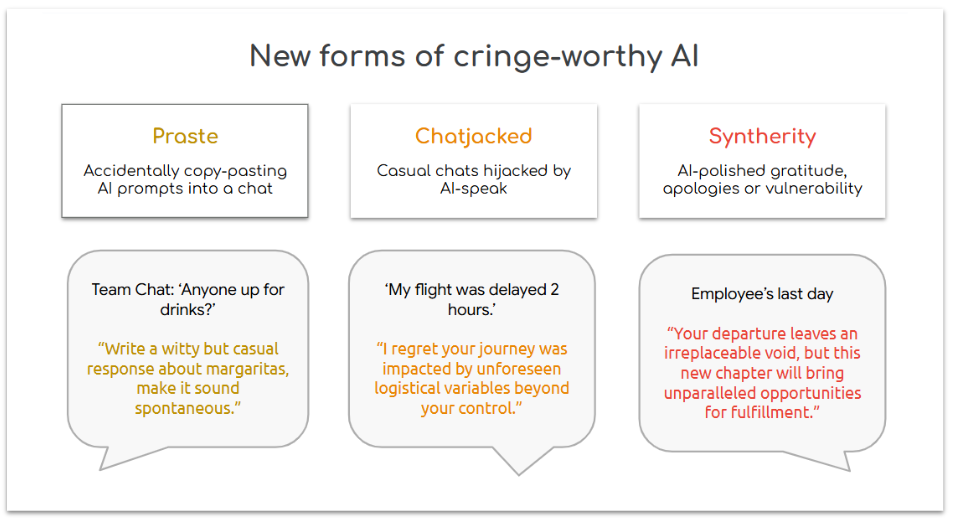

When I was interviewing for my role at UW, I asked a former colleague to grab coffee and share advice. We couldn’t find a convenient time, so he sent me a long email calling out the accomplishments I should highlight. It quickly dawned on me that he had used AI to write it. Not only was it crawling with em-dashes (“–”, a hallmark of AI writing), but it included bullets pulled from deep in my LinkedIn profile. This is known as GP-TMI: a hyper-personalized AI message that feels creepy because it goes beyond the level of detail a human would include. Here are some other examples of this awkward AI moment.

The Slopalypse arrives

The Stanford report surveyed workers to understand the extent of workslop. It found that 41% had received it in the past month, and respondents estimated that it made up about 15% of the content they receive.

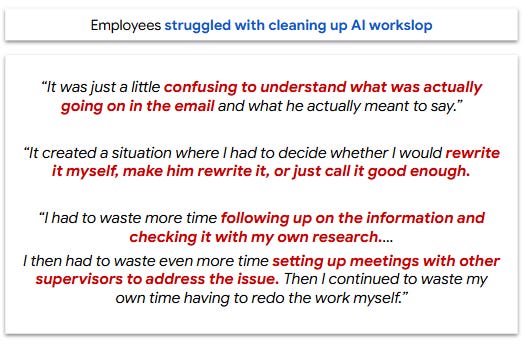

This hollow content has a cascading effect, creating more work for downstream employees who spent an average of two hours decoding, fact-checking or completely redoing AI-generated work. Stanford estimated this “AI tax” costs a company with 10,000 employees $9 million per year in lost productivity.
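For the curious, here is a rough back-of-the-envelope sketch of how a figure like that can come together. The 10,000 employees and the 41% come from the numbers above. The $186 per affected employee per month is an assumption I’m plugging in for illustration, not a number stated in this article, and the function name is made up.

```python
def annual_workslop_cost(employees, share_affected, monthly_cost_per_affected):
    """Estimate yearly productivity lost to workslop.

    monthly_cost_per_affected is an ASSUMED input (dollars per affected
    employee per month); it is not a figure given in this article.
    """
    affected_employees = employees * share_affected
    return affected_employees * monthly_cost_per_affected * 12


estimate = annual_workslop_cost(
    employees=10_000,
    share_affected=0.41,            # 41% received workslop in the past month
    monthly_cost_per_affected=186,  # assumption for illustration
)
print(f"${estimate:,.0f} per year")
```

Under those assumptions the total lands a little over $9 million, which is in line with the estimate above. Change any input and the “AI tax” scales right along with it.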

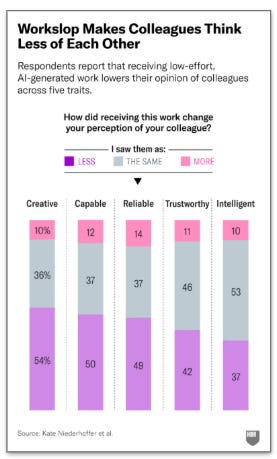

The impact went beyond lost productivity. Over half of respondents were annoyed when they received workslop, and they saw the colleagues who sent it as less creative, capable and reliable.

This reputational damage isn’t equally distributed. Recent HBR research on software engineers found the credibility hit was twice as harsh for women and older developers as it was for younger male coworkers.

There are a lot of factors behind this, from companies mandating AI use without training and guidance to measuring usage over business impact. But for this article, I’m going to share four ways you can thrive in the impending slopalypse.

When to use AI: The SPUD model

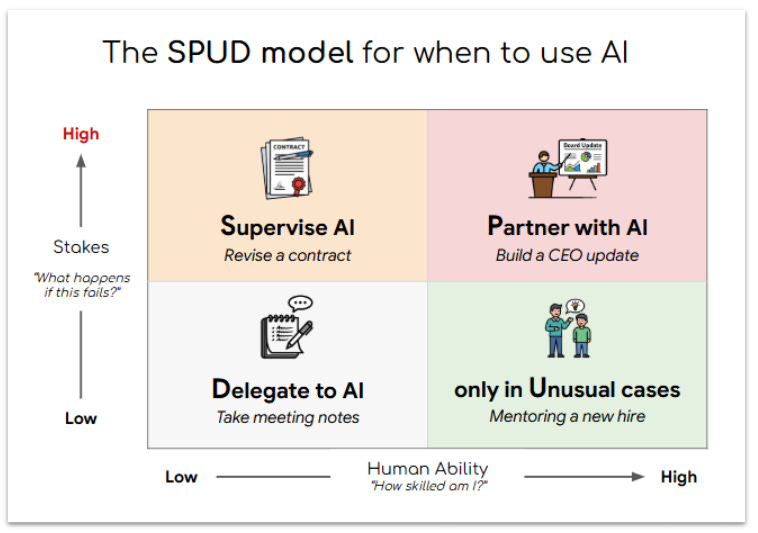

Chatbots are the ultimate try-hards, and will do pretty much anything you ask of them. But when should you ask? I recommend a model based on two factors: the stakes (what happens if this fails?) and your ability (how skilled am I?). Here’s how it goes:

Supervise AI when the stakes are high but your skill is low (ex: Reviewing a contract). This is a binding document and you’re not a lawyer. Have AI suggest changes, then review them and make the official request yourself.

Partner with AI when both stakes and skill are high (ex: Preparing a CEO update). This is critical and you’re the expert. Use AI for research, drafting and feedback to make it bulletproof.

Use AI for unusual cases when stakes are low but your skill is high (ex: Mentoring a new hire). You have deep expertise to share, but occasionally hit edge cases you haven’t seen before.

Delegate to AI when both stakes and skill are low (ex: Capturing meeting notes). These are nice to have, and not your strength. Let AI handle it.
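If you think in code, the four quadrants above boil down to a two-question lookup. This is just a toy sketch in Python. The function name `spud` and the yes/no inputs are my own hypothetical shorthand, and real decisions are rarely this binary.

```python
# Toy lookup for the SPUD quadrants described above.
# Keys are (stakes_are_high, skill_is_high); all names are illustrative only.
SPUD = {
    (True, False): "Supervise AI",
    (True, True): "Partner with AI",
    (False, True): "Use AI for unusual cases",
    (False, False): "Delegate to AI",
}


def spud(stakes_are_high: bool, skill_is_high: bool) -> str:
    """Return the suggested way to work with AI on a task."""
    return SPUD[(stakes_are_high, skill_is_high)]


# Reviewing a contract: high stakes, low skill.
print(spud(stakes_are_high=True, skill_is_high=False))
```

Asking the two questions out loud before you open a chatbot is the real point. The code just shows there are only four answers.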

How to use AI well: Pumping Iron

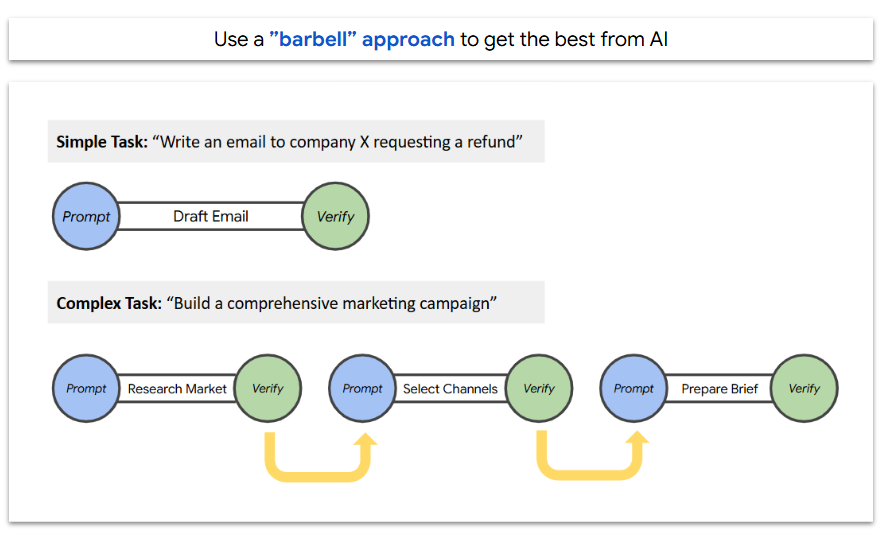

A great approach for working with AI is the barbell model. One end of the barbell is prompting, or giving it robust context and detailed questions. The second end of the barbell is verification, or checking facts, trimming fluff and making it sound like you. AI is the heavy bar in the middle.

For example: don’t just say “write an email requesting a refund”. Instead, tell it “Draft a firm email to Best Buy requesting a refund for the wireless earbuds I bought on September 12. The right earbud does not charge.”

Then verify: check the order number and the email address, and trim the overly wordy output you’re sure to get.

One other note: AI works great for simple tasks but struggles with complex ones. Instead of asking it to “build a marketing campaign”, break it down into smaller steps you can guide and review.

Sending Without the Stigma

When sending AI-influenced work, transparency matters, given the negative opinions many people hold of AI. Acknowledge your use and show you’re open to feedback:

Acknowledge: “Here’s a draft I created (with help from AI).”

Offer Opt-out: “Here’s a potential starting point courtesy of ChatGPT that might help.”

Probing the Prompt

When you suspect something is AI-written, things are touchier. Here’s some language you can use to broach the subject:

Acknowledge: “Wow, this is so in-depth!”

Probe: “Any chance AI was involved with this?”

Challenge: “I feel like we’re jumping straight to writing. Could we discuss the overall picture first?”

These are actual lines I’ve used! Feel free to borrow and make them your own. Adjust as needed to fit your style and the situation.

My Take: From Slop to Substance

A line often credited to Mark Twain (though it traces back to Blaise Pascal) goes: “I didn’t have time to write you a short letter, so I wrote you a long one.” AI chatbots are the perfect embodiment of this.

I spend a good part of each weekend writing this newsletter, and I’ve tried AI at every stage. It’s a great partner for research, ideation and feedback.

It regularly offers to write my newsletter, which is tempting on a Sunday afternoon when the Bears are playing. But its writing is, well, sloppy: long and inauthentic. (And its Dad jokes are worse than mine!) Those editions wouldn’t be worth your time and I wouldn’t be proud of them.

The AI slopalypse is here, but you don’t have to be a casualty. By following the SPUD model and being transparent, you can build your reputation instead of damaging it.

Dad Joke: What did people call Joe Rogan’s ten-hour AI-generated podcast? The “Sloppy Joe Experience” 🤣