Enterprise AI: Your 2026 Planning Guide

Escaping Pilot Purgatory

Mark’s AI strategy deck from March has eight slides. Four are already obsolete. The board meeting is in three weeks.

His VP messaged him twenty minutes ago. “CEO just talked with our favorite activist board member. He read the McKinsey AI report and thinks we’re falling behind. They want a deep-dive review of the AI plan at our next board meeting. I need you to take the lead on the deck.”

Mark’s shoulders drop. So much for the two-week holiday cabin rental he just booked.

He looks through the deck. Slide three: Copilot rollout. Done. Slide six: Customer service chatbot. Paused indefinitely. Three weeks to get ready for the firing squad.

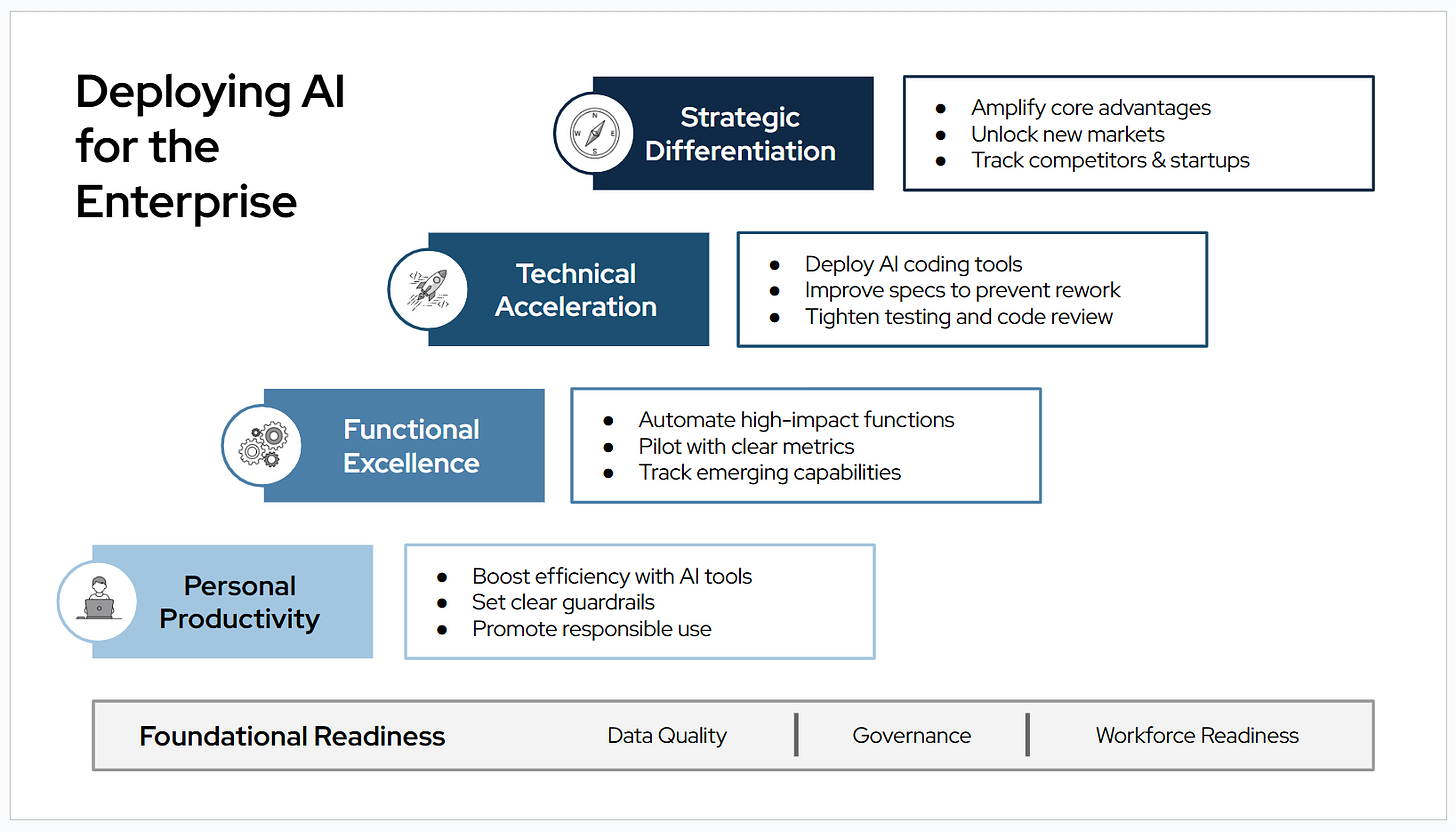

Personal Productivity: The Rise of Shadow AI

Mark’s team rolled out Microsoft Copilot across the company in January. They did it the ‘right’ way, with training sessions, responsible use guidelines and department champions.

Most employees use Copilot, but ChatGPT traffic has increased 5x this year. Mark asked a friend in sales about it. The friend chuckled. “Copilot’s good for recording meetings and writing emails, but it doesn’t even have the latest information on our products. I can ask ChatGPT anything.”

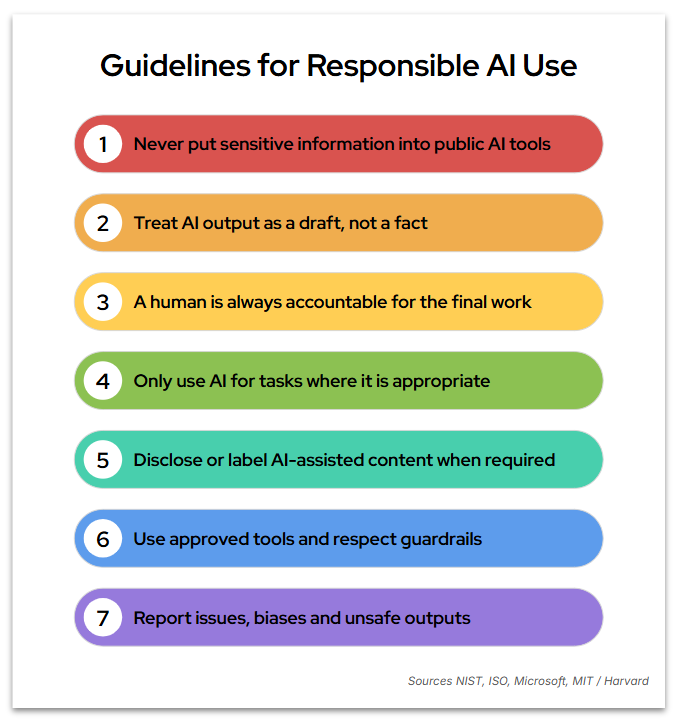

IT has good reason to be cautious. In 2023, Samsung employees pasted confidential source code into ChatGPT conversations, and the company banned the tool soon after. Incidents like this have made companies paranoid about data leaks, and many overcorrected, hobbling their AI tools by restricting access to internal data and the internet.

This approach typically backfires. A recent survey found 59% of employees use ‘Shadow AI’ tools, and nearly half would keep using them even if banned completely.

What Works: Shadow AI users are a goldmine! Many are so AI-native they’ll skirt corporate rules to use their own AIs. Some companies are launching “AI amnesty” programs to learn from them and improve their official tools.

The best strategy is risk-based access. Many use cases, like research, ideation and writing feedback, carry little risk. Top companies like PwC, HubSpot and Capital One give employees’ AI tools full data access, restricting only sensitive information and backing that access with usage guidelines.
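For readers who want to see what risk-based access might look like in practice, here is a minimal Python sketch. It is an illustration only, not how any of the companies named above implement it: the use-case names, the `decide_access` function and the three outcomes are all assumptions made up for this example.

```python
# Hypothetical list of use cases treated as low risk (taken from the examples above).
LOW_RISK_USE_CASES = {"research", "ideation", "writing_feedback"}


def decide_access(use_case: str, contains_sensitive_data: bool) -> str:
    """Return 'allow', 'block' or 'review' for an AI tool request.

    Assumption: sensitive data is always blocked, known low-risk use cases
    are allowed, and anything unrecognized goes to a human for review.
    """
    if contains_sensitive_data:
        return "block"
    if use_case in LOW_RISK_USE_CASES:
        return "allow"
    return "review"


for case, sensitive in [("research", False), ("research", True), ("payroll_analysis", False)]:
    print(case, sensitive, "->", decide_access(case, sensitive))
```

The point of the sketch is the default: most requests pass, sensitive data never does, and only the unfamiliar cases need a person's attention.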

Functional Excellence: Pilot Paralysis

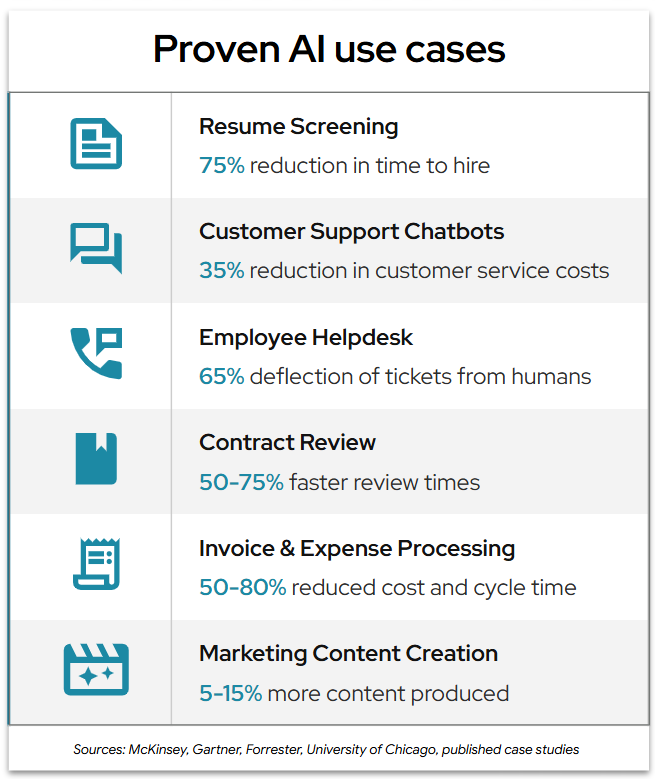

Copilot covered the basics, but Mark’s team struggles with where to go next. Vendors bombard him with pitches for AI-enabled solutions that seem impressive but are unproven. The avalanche creates decision fatigue, and pilots become the default answer. Pilots are great for learning, but it’s easy to get stuck testing everything and deploying nothing.

What Works: In a period of mounting choices, effective piloting is a competitive advantage. Companies get there with a repeatable testing process: sandbox environments, clear success metrics and a dedicated team. The best ones even make pilot velocity a metric to track and improve.

At the same time, skip the pilot for proven AI solutions like HR resume screening, contract review and email generation, which already run at thousands of companies. Extended pilots for these are procrastination dressed up as due diligence.

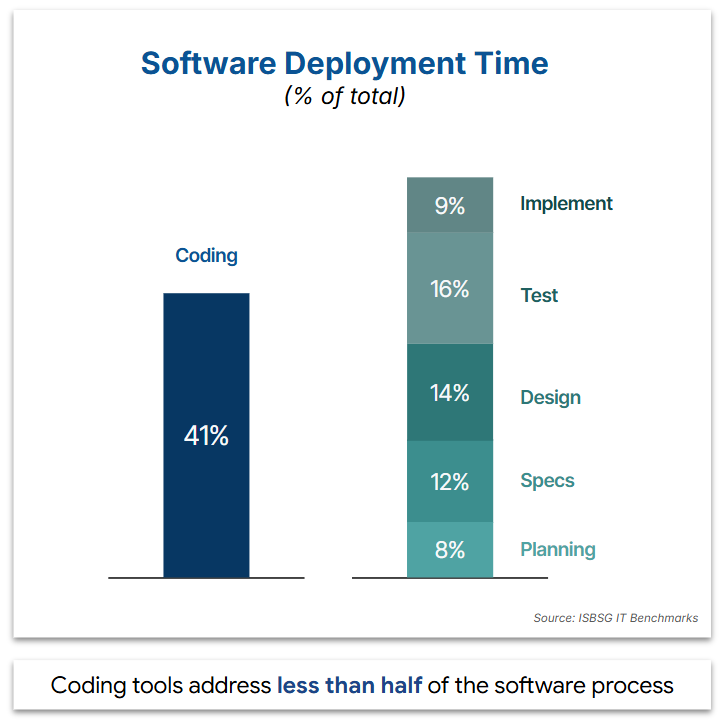

Technical Acceleration: The 50% Myth

In Mark’s next 1-1 with his VP, she puts him on the spot: “Mark, explain something to me. We bought Claude Code for the dev team. They’re supposed to be 50% faster now. Why doesn’t our 2026 roadmap reflect that?”

The answer is that coding is only 40% of a software project. The other 60% is spent on requirements, design and testing, which don’t speed up just because coding does.
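The arithmetic behind that answer fits in a few lines of Python. This is a back-of-the-envelope sketch, not a forecasting model: it takes the 40% coding share from above and assumes “50% faster” means coding takes half as long. The function name `project_time_remaining` is made up for this example.

```python
def project_time_remaining(coding_share: float, coding_time_factor: float) -> float:
    """Fraction of the original schedule left when only coding speeds up.

    coding_share: portion of the project spent coding (0.40 per the text).
    coding_time_factor: new coding time as a fraction of the old one
        (assumption: 0.5, i.e. coding takes half as long).
    """
    if not 0.0 <= coding_share <= 1.0:
        raise ValueError("coding_share must be between 0 and 1")
    other_share = 1.0 - coding_share  # requirements, design, testing: unchanged
    return other_share + coding_share * coding_time_factor


remaining = project_time_remaining(coding_share=0.40, coding_time_factor=0.5)
print(f"Schedule after speedup: {remaining:.0%} of original")
print(f"Overall time saved: {1 - remaining:.0%}")
```

Under those assumptions the whole project finishes about 20% sooner, not 50%. If “50% faster” instead means 1.5x coding throughput, set `coding_time_factor` to `1 / 1.5` and the saving shrinks to roughly 13%.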

What Works: AI coding tools like Cursor and Claude Code are a revelation. Beyond speed, developers using them are over three times happier. But deploying them well requires discipline, including human reviews, extra testing, and vulnerability scanning.

Being 50% faster at building the wrong thing is still a waste of time! AI can help get requirements right by analyzing support tickets for hidden themes and checking meeting transcripts against design docs to catch gaps.

Strategic Differentiation: Remember Chegg?

One of Mark’s product managers forwards him a demo video from a competitor’s launch. Their product has similar features, but with AI embedded throughout. Mark’s stomach drops. He’d heard of this company but thought it was vaporware.

Mark isn’t paranoid. Remember Chegg? Its main business was selling expert Q&A to help college students with homework. ChatGPT offered the same thing, but cheaper, fully customized and on demand. Chegg’s business evaporated, and its stock has dropped 99%.

Mark’s team delivered valuable solutions in 2025, all focused on efficiency. A 10% productivity gain or 25% fewer customer service tickets is like corporate dopamine: a measurable win, but a distraction from where AI will disrupt your entire industry.

A positive example is John Deere. Its Precision Agriculture strategy uses GPS, cameras and AI for precision seed planting and targeted pesticide spraying, cutting costs by 50% and improving yields. It’s grown into a data platform for farmers and a basis for self-driving tractors that can work 24/7 during peak periods.

What Works: Leaders should review their own offerings through an AI lens. Where are we vulnerable to disruption from competitors offering our products cheaper or for free? And where can we use AI to build features our customers want but we can’t offer today?

Data Foundations: Why the Chatbot Failed

The low point of Mark’s year was a customer service chatbot project. It seemed straightforward, with lots of successes across the industry. But the early version acted…weird. It was often defensive, and escalated even simple issues to human operators. They dug in and realized that logs from “normal” calls older than 30 days were regularly purged, so the chatbot was trained primarily on complaints. This caused the agent to treat every customer as an angry user. They paused the project until they could collect balanced training data.

Data quality is AI’s Achilles heel. Enterprise data is often biased, fragmented or just plain missing. It’s also frequently mislabeled: a customer saying “I don’t want a refund, I want a replacement” might be tagged as a refund request. It’s especially hard to detect these problems since AI models speak with confidence even when giving completely wrong answers.

What Works: Make data quality a mandatory step before training any AI model, and regularly audit data sources for bias, gaps, and labeling errors. Hire or train specialists who understand both the business and AI data needs. Establish cross-functional ownership so teams develop data standards instead of operating in silos.
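A first-pass bias audit does not need to be elaborate. The Python sketch below shows one possible check, assuming each training record already carries a category label. The function name `audit_label_balance`, the 60% threshold and the sample data are all hypothetical, chosen only to mirror the chatbot story above.

```python
from collections import Counter


def audit_label_balance(labels, max_share=0.6):
    """Return {label: share} for every label above max_share of the data.

    Assumption: a single label dominating the training set is a warning
    sign worth investigating, not proof of a problem.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {
        label: count / total
        for label, count in counts.items()
        if count / total > max_share
    }


# Made-up sample resembling the purged call logs: mostly complaints.
call_labels = ["complaint"] * 85 + ["routine"] * 15
print(audit_label_balance(call_labels))
```

On this made-up sample the check flags “complaint” at 85% of records, the kind of skew that would have surfaced the chatbot’s problem before training began.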

Data quality investments are a tough sell without a clear ROI. Two approaches that work: frame them as AI infrastructure that enables multiple projects, or add a data quality line item to each project’s budget.

The Mandate

Mark walks out of his board meeting. His VP gives him a nod. The activist investor pushed hard, but they had answers.

The real win wasn’t the presentation; it was the mandate they won: Mark’s December fire drill is now a fully funded 2026 roadmap.

He opens his laptop and starts looking at New Year’s cabin rentals.

Dad joke: Mark asked his new AI Agent to make him coffee. It refused. Not enough grounds. 🤦 😂

Thanks for reading!

If you enjoyed this edition, share it with someone who wants to avoid their own AI fire drill.