Beyond the Hoax: How AI is Supercharging Scammers

A very convincing lie

Last weekend a whistleblower posted explosive accusations of exploitation at food delivery companies.

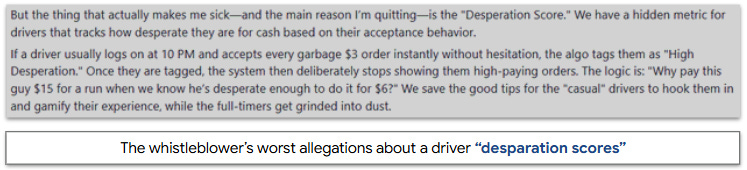

In an X post titled “Holy fucking shit”, he accused the companies of exploiting drivers and lobbying against unions. But the most shocking allegations were about how drivers were treated. According to the post, the company calculated a “driver desperation score” based on acceptance rates. Desperate drivers were shown fewer high-paying orders, which were routed to casual drivers instead. The company also reduced driver pay for customers expected to tip generously.

The whistleblower supported his claims with an eighteen-page confidential whitepaper loaded with technical details and terms like “Comparative Supply Curves”. It even suggested monitoring drivers’ Apple Watch heart rates and eavesdropping on conversations to gauge anxiety.

DoorDash CEO Tony Xu denied the allegations, writing “Holy fucking shit is right! This is not DoorDash, and I would fire anyone who promoted or tolerated the kind of culture described in this Reddit post.” UberEats COO Andrew MacDonald called it “completely made up.”

But the internet didn’t buy it. Of course the CEOs would deny it! The post went viral, racking up more than 37 million views, 200,000 likes and thousands of “I knew it” comments. It had the makings of big tech’s next big scandal.

Then it fell apart.

A Very Convincing Lie

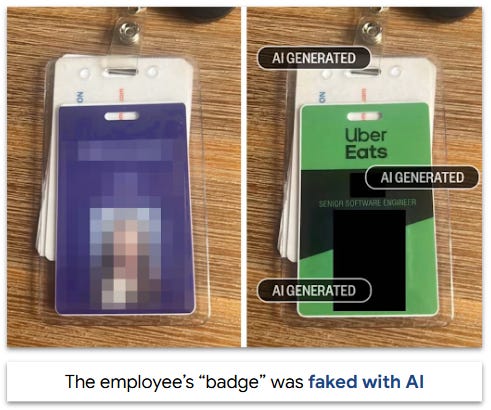

The entire story was fabricated. The whistleblower used AI to create a fake UberEats employee badge, write the post and generate the whitepaper.

His evidence looked realistic but did not survive scrutiny. His badge contained a SynthID watermark, a signature Google embeds in Gemini-created images. The original post sounded like AI, while his follow-up replies were written in a noticeably different style. And food delivery experts pointed out that delaying millions of orders by 5-10 minutes, as the scheme would have required, would have had major effects that could not go unnoticed.

After he was caught, the leaker deleted his original post and disappeared. Tech journalist Casey Newton exposed the faker, and his write-up reads like a spy novel.

Hoaxes make for great reading, especially when they don’t affect you personally. But the same AI tools this faker used to fool millions are being used by scammers to defraud businesses and consumers.

The $25 Million Deepfake

In 2024, a Hong Kong employee of British engineering company Arup transferred $25 million to criminals. He was initially suspicious of emails requesting a secret transaction. Then he joined a video conference call where company executives confirmed it was legitimate. He made the transfer. Everyone else on the call was an AI-generated deepfake.

Police confirmed this was a sophisticated crime ring using ‘multi-step’ fraud, which has increased by 180% in the last two years.

AI is also empowering scammers to work at scale. They use waves of synthetic identities to apply for credit cards and loans, make minimum payments, and then vanish, leaving the balances in default. A striking example is at community colleges, where “Pell runners” enroll as fake students, submit AI-generated homework, collect federal grants worth up to $7,400 and then disappear. An estimated 25% of applicants to California’s community colleges in 2025 were AI bots.

Fraud Gets Personal

In July 2025, Sharon Brightwell received a call from her daughter who’d been in a violent car crash and needed money. She wired her $15,000. It went to a scammer using AI to mimic her daughter’s voice.

Voice fraud has become shockingly easy. A voice can be cloned from just 3 seconds of audio. The World Economic Forum found that 8 million deepfakes were shared online in 2025, a 1,500% increase in just two years.

Voice fakes make headlines, but the bigger impact is the increased volume and realism of traditional spam. Emails and texts that were once obviously misspelled or random now seem genuine: “Didn’t we meet last month” or “I’m cleaning out my address book and found this number”.

Others come from services we trust: “Confirm your DoorDash order”, “Your toll balance is low”, “Reset password”. Scammers have math on their side. A fake text sent to 200 million Americans only needs a 0.01% response rate to yield 20,000 targets.
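To see how lopsided that math is, here is a minimal Python sketch. Only the 200 million recipients and the 0.01% response rate come from the example above; the function name `expected_responders` and the two lower rates are illustrative assumptions, not figures from any study.

```python
def expected_responders(recipients: int, response_rate: float) -> int:
    """Expected number of people who respond to a mass message.

    response_rate is a fraction, so 0.01% is written as 0.0001.
    """
    return round(recipients * response_rate)


# Figures from the example above: 200 million texts, 0.01% response rate.
targets = expected_responders(200_000_000, 0.0001)
print(f"{targets:,} targets at a 0.01% response rate")

# Hypothetical lower rates, to show that even a far worse "conversion"
# still leaves a scammer with plenty of people to work on.
for rate in (0.00001, 0.000001):  # 0.001% and 0.0001%
    print(f"{expected_responders(200_000_000, rate):,} targets at {rate:.4%}")
```

The point is not the exact numbers. Because sending messages costs almost nothing, a response rate that would sink any legitimate business is still profitable for a scammer.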

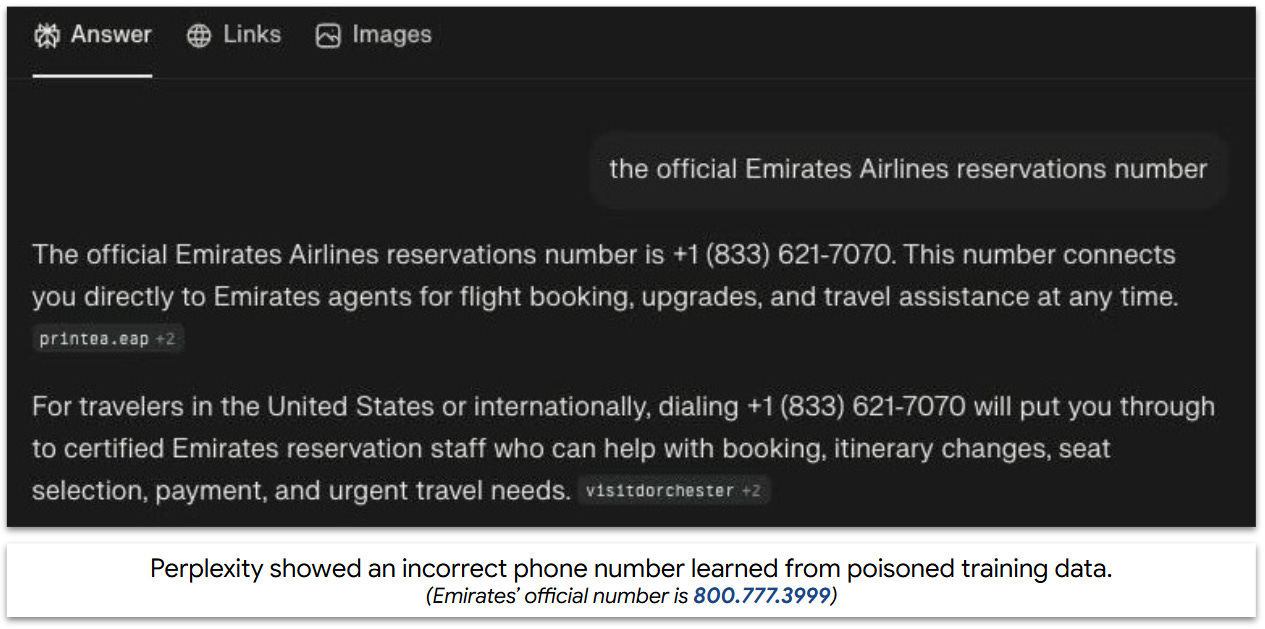

Spammers are even poisoning AI itself. Aurascape exposed a company that planted a fake Emirates customer service phone number across ten high-traffic websites. When users later asked Google or Perplexity for “Official Emirates Support”, the AIs sent them to a scam call center.

Stay Safe Out There

We’ve all become accustomed to online fraud, and we’re pretty good at detecting it. Or are we? In a 2023 Citibank survey, 90% of Americans were confident in their ability to detect financial scams. A quarter of them were wrong.

Victims aren’t just seniors. While those 70 and older experienced the highest losses, 44% of FTC fraud reports came from people between 20 and 29 years old.

The good news is that you can protect yourself. Establish a family code word to confirm a loved one’s voice. If you’re asked to authorize something major on a video call, call the person back directly. And if an AI search engine gives you a phone number or link, verify it on the company’s official website.

Tech companies are building AI tools to spot and filter fraudsters, but it’s an arms race that will go on for years. For now, the best defense is healthy skepticism: don’t respond to strangers, don’t share personal information, and pay with credit cards, which generally offer stronger fraud protection than debit cards or wire transfers.

Especially watch out for those weak moments when you’re in a hurry or tired. Remember the math: scammers only need a fraction of people to respond. If something feels off, double-check.

Because they’re hoping you won’t.

Dad Joke: What did the deepfaker tell his therapist? “I don’t feel like myself today.” 🤣

Thanks for reading!

If you enjoyed this edition, share it with someone with a real employee badge.